
Within a decade, an independent developer, working alone, will create a game with the size, scope and artistry of Elden Ring.

And then millions of other people will do the same thing.

How could a single person do the work of a team whose game cost over $100,000,000 to create? And how could a million people each create a game of this magnitude?

Why does it cost hundreds of millions of dollars to make AAA games in the first place? The answer is usually related to content: there’s an enormous amount of bricklaying, brick manufacturing and artisanal brick-crafting in the business of game-making.

But in the future, game-makers may be more like composers than bricklayers. This shift will be supported by an emerging field called Computational Creativity. The Association for Computational Creativity defines the purpose of this field as:

to construct a program or computer capable of human-level creativity

to better understand human creativity and to formulate an algorithmic perspective on creative behavior in humans

to design programs that can enhance human creativity without necessarily being creative themselves

Part of this creativity — especially the aspect of enhancing human creativity — will be supported by composable frameworks, which I wrote about previously.

The other major force is artificial intelligence (AI) technology capable of generating creative works. Most of the remainder of this article will focus on the astonishing developments already happening in this field.

Disco Diffusion is an online tool that runs inside Google Colab, a platform that lets you execute Python programs on cloud-based GPUs. It uses a machine-learning model that produces startlingly "creative" artwork. Here is a small collection of the 25 monsters I created with it, using about 5 minutes of human effort (plus 24 hours of computing time):

Now, I'll admit that these monsters are not as amazing and interesting as the ones you'll find in Elden Ring itself. Not even close. But they're still very impressive, considering a computer made them with minimal input from me. If it is this good now, imagine how good it will be in a decade.

Here is another piece I composed, Aftermath of War, also created with Disco Diffusion, this time experimenting with the theme of "sunflowers" and destroyed tanks. It shows that the AI model can not only reproduce the artistic style of something like Elden Ring, but also fuse together ideas that may never have been combined before:

Some people react to AI with alarm — are we just going to let the machines take over? Others see it as lacking the humanity of the creative process. In the Procedural Art group I participate in on Facebook, I received this comment in response to Aftermath of War:

My problem with AI art is that the value is in the human side: the idea, the language used to express it, the data set used, the eyes that perceive it. So, like in this case, why not humanise as well the act of painting the image?

I understand these concerns. What do we lose when we ask a computer to do what only humans could do in the past?

In my case, I can’t paint — at least not as well as the computer did with Aftermath of War.

But perhaps the human aspect of this is that we don’t really paint with paintbrushes. We paint with ideas. A comment from the same group:

Now, I happen to believe that when computationally generated paintings become commonplace, the best artisanal, human-crafted paintings may become scarce and therefore more valuable. But "painting with ideas" is a way to exponentially increase the rate of human output, shifting work towards ideation, iteration and composition.

To populate an immersive world (a game, the metaverse, a simulation), you'll need to go from digital paintings to 3D models. A 3D model is a set of geometry that defines the shape of an object in space. This paper shows how the properties of 3D models can be learned, and how new versions can be generated according to the learned properties.
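To make "a set of geometry" a little more concrete: many real-time engines represent a model as a polygon mesh. The sketch below assumes an indexed triangle mesh, meaning a list of vertex positions plus triangles that reference those vertices by index. The `TriangleMesh` class and its methods are hypothetical names chosen for illustration; this is not the representation used in the paper above or the format of any particular engine.

```python
import math
from dataclasses import dataclass

# Hypothetical type alias for a point in 3D space (x, y, z).
Vec3 = tuple[float, float, float]


@dataclass
class TriangleMesh:
    """A minimal indexed triangle mesh: shared vertex positions, plus
    faces that refer to those vertices by their index in the list."""
    vertices: list[Vec3]
    faces: list[tuple[int, int, int]]

    def bounding_box(self) -> tuple[Vec3, Vec3]:
        """Return the (min corner, max corner) of the axis-aligned box
        that encloses every vertex."""
        xs, ys, zs = zip(*self.vertices)
        return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))

    def surface_area(self) -> float:
        """Sum the area of every triangle. Each triangle's area is half
        the length of the cross product of two of its edge vectors."""
        total = 0.0
        for a, b, c in self.faces:
            p, q, r = self.vertices[a], self.vertices[b], self.vertices[c]
            u = [q[i] - p[i] for i in range(3)]  # edge p -> q
            v = [r[i] - p[i] for i in range(3)]  # edge p -> r
            cross = (
                u[1] * v[2] - u[2] * v[1],
                u[2] * v[0] - u[0] * v[2],
                u[0] * v[1] - u[1] * v[0],
            )
            total += 0.5 * math.sqrt(sum(x * x for x in cross))
        return total


# A tetrahedron with one corner at the origin. Each face is wound so
# that its right-hand-rule normal points away from the solid.
tetra = TriangleMesh(
    vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)],
    faces=[(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)],
)

print(tetra.bounding_box())   # the unit cube's corners
print(tetra.surface_area())   # three right triangles plus one equilateral triangle
```

Because faces share vertices by index, moving one vertex position reshapes every triangle that references it: the connectivity stays the same while the shape changes.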

Newer machine-learning models are teaching computers how to generate a 3D object from an image. This explanatory video demonstrates how using photos to generate novel 3D models could rapidly speed up the building of game worlds:

These earlier technologies — frequently the domain of research papers and awkward Python scripts — are being productized. This demo from Kaedim shows how you can create a 3D mesh from some concept art:

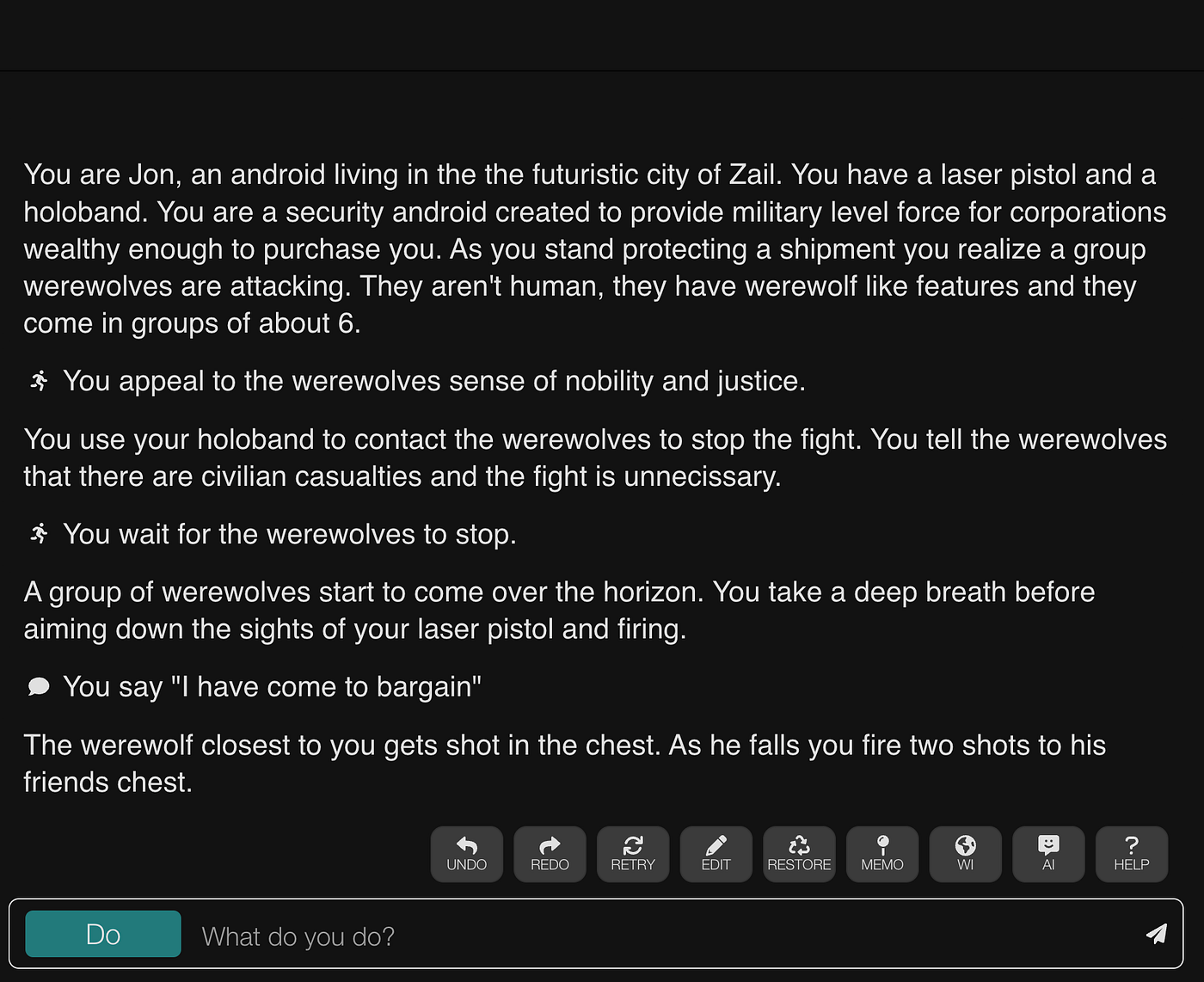

Language models such as GPT-3 are capable of interpreting and generating surprisingly human-like text. Here is a generated story inside AIDungeon:

Google's more recent Pathways Language Model (PaLM), with 540 billion parameters, is even more advanced than GPT-3: it can explain jokes, follow a chain of reasoning, recognize patterns, answer questions about scientific knowledge, and summarize texts. As language models gain more parameters, the depth of "understanding" they can demonstrate keeps expanding:

Here it is explaining a joke:

If an AI can generate 3D models and understand stories, it can also use language prompts to help you compose entire worlds. That is precisely what Promethean AI seeks to do:

The power of a system like Promethean comes from its composability. It isn't simply using AI to generate objects and settings (although that's amazing); its real strength lies in iteration, aggregation and tweaking. Having a scaffolding for your ideas is a force multiplier for one's creative output, just as modders have found in Minecraft, Roblox and other frameworks.

Yannic Kilcher used learning models called CLIP and BigGAN to generate a music video to go with some lyrics he wrote. The lyrics are basically meaningless, except that they contain a lot of words that are interesting to display through the image-generation models:

AI can also be used to generate code. Here's a minimal version of a Wordle-type game that Andrew Mayne created by giving OpenAI's Davinci model a few lines of input:
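For a sense of how small the core of such a game is, here is a hand-written sketch of the one rule every Wordle-type game has to get right: scoring a guess against the answer, including repeated letters. This is not Andrew Mayne's generated code, which isn't reproduced here. The function names and the "G" / "Y" / "." feedback notation are assumptions made for illustration.

```python
from collections import Counter


def score_guess(guess: str, answer: str) -> str:
    """Hypothetical scorer. Returns one symbol per letter:
    'G' = right letter in the right spot, 'Y' = letter appears elsewhere
    in the answer, '.' = letter not available in the answer."""
    guess, answer = guess.lower(), answer.lower()
    if len(guess) != len(answer):
        raise ValueError("guess and answer must be the same length")
    result = ["."] * len(guess)
    # Answer letters that were NOT matched exactly; only these can earn a 'Y'.
    remaining = Counter(a for g, a in zip(guess, answer) if g != a)
    # First pass: exact matches.
    for i, (g, a) in enumerate(zip(guess, answer)):
        if g == a:
            result[i] = "G"
    # Second pass: misplaced letters, consuming counts so duplicates are handled.
    for i, (g, a) in enumerate(zip(guess, answer)):
        if g != a and remaining[g] > 0:
            result[i] = "Y"
            remaining[g] -= 1
    return "".join(result)


def play(answer: str, max_turns: int = 6) -> None:
    """Hypothetical console loop: read guesses until solved or out of turns."""
    turn = 1
    while turn <= max_turns:
        guess = input(f"Guess {turn}/{max_turns}: ").strip()
        if len(guess) != len(answer):
            print(f"Please enter a {len(answer)}-letter word.")
            continue  # an invalid guess does not use up a turn
        feedback = score_guess(guess, answer)
        print(feedback)
        if feedback == "G" * len(answer):
            print("Solved!")
            return
        turn += 1
    print(f"Out of turns. The word was {answer}.")
```

For example, `score_guess("speed", "abide")` marks only the first "e" as misplaced, because the answer contains a single "e".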

Artificial intelligence is contributing to the field of computational creativity at a furious pace, and that pace will continue to accelerate exponentially.

As frameworks for composability make it easier to connect pieces of creative content together, and AI fuels a wide variety of iterative content creation, the result will be an even steeper, exponential increase in creative output from individuals and small teams.

Thanks to the composability of platforms like Minecraft and Roblox, people are crafting experiences that could have never been built as commercial games. The next era of creativity — which will include the ability to rapidly iterate ideas with the help of artificial intelligence — will include experiences we couldn’t even imagine to begin with.
